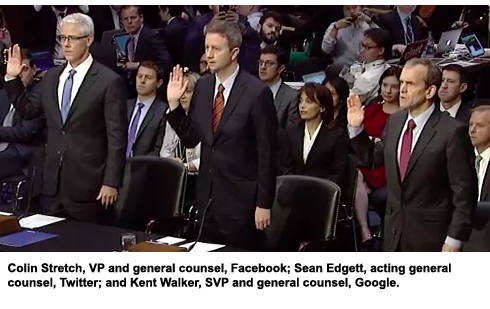

The tech titans have testified about Russian election meddling.

After two days of questioning by members of Congress on Tuesday and Wednesday, aimed at top lawyers from Facebook, Twitter and Google, it’s clear that Russian operatives continue to spread propaganda and sow social discontent using social media platforms, and that those platforms hope to avoid regulation with numerous 11th-hour transparency measures.

“Given the complexity of what we’ve seen, if anyone tells you they’ve got this all figured out, they’re kidding themselves,” said Sen. Richard Burr (R-N.C.) on Wednesday during a hearing of the Senate Select Committee on Intelligence. “But we can’t afford to kid ourselves about what happened last year and continues to happen today.”

Propaganda Machine

Although some of the ads paid for by entities associated with the Kremlin, such as the Internet Research Agency, geotargeted specific demographic groups in key swing states in the lead-up to the presidential election, including Michigan and Wisconsin, more ads were targeted at states like Maryland that were dominated by Hillary Clinton.

Almost five times as many ads were targeted at Maryland (262 ads), for example, as at Wisconsin (55 ads). Of those 55 ads, 35 ran before the primary, when a Republican candidate had not yet been identified, and none of the 55 mentioned Donald Trump by name.

In general, a greater number of the Russia-linked geotargeted ads ran in 2015 than in 2016 with the goal of exploiting American social and political divisions. The ads focused on different sides of hot-button issues, including race, immigration, religion, gun control and gay rights.

But paid advertising was and is just one tool in Russia’s propaganda arsenal.

Sen. Mark Warner (D-Va.) called it the “Russian playbook,” which hasn’t changed much since the Cold War. But modern social media tools enhance the propaganda’s efficacy.

“Today’s tools in many ways seem almost purpose-built for Russian disinformation techniques,” Warner said.

Disinformation agents populate fake accounts, groups and pages with fake content and use tools like paid ads and bots to get real people to follow, engage and share. From that point, the distribution and amplification are organic.

Considering the Internet Research Agency spent just $100,000 to reach 146 million people across Facebook and Instagram – more than one-third of the US population – the strategy is insidiously effective.

Still Gunning For Self-Reg

After initially downplaying the abuse of their platforms by Russian agents, the platforms are ready to play ball – up to a point. Facebook, Twitter and Google have all announced political advertising-related reforms over the past month to avoid regulation.

All three have said they will begin to clearly label political ads and provide more information about who’s running and funding the ads, as well as visibility into what other ads (not just political) that advertiser is running. They’ve all tweaked their ad policies and pledged to hire more human reviewers and invest in machine learning and AI to help them identify threats and police their platforms.

Facebook General Counsel Colin Stretch even name-dropped the Honest Ads Act during Wednesday’s hearing, noting that the new ad transparency measures Facebook announced last week purposely draw on tenets laid out in the proposed legislation.

If passed, the law would require that online political advertising be subject to the same disclosure requirements as traditional political advertising. The platforms all publicly support the Honest Ads Act, but clearly would prefer self-regulation.

But better disclosures for online paid political ads don’t address the distribution of fake content, and Warner, a co-sponsor of the Honest Ads Act, acknowledged this limitation.

“These ads are just the tip of a very large iceberg,” Warner said. “The real story is the amount of misinformation and divisive content that was pushed for free on Russia-backed pages which was then spread widely through the news feeds of tens of millions of Americans. I doubt that the so-called Internet Research Agency in St. Petersburg represents the only Russian trolls out there.”

A number of Warner’s Senate intel committee colleagues were similarly skeptical, and by the end of Wednesday morning’s hearing one final thing was clear: Lawmakers are not going to let this go.

“Ads are a small part of a much bigger problem: fake users posting stories on Facebook, video on YouTube and links on Twitter that can be used by foreign and domestic enemies to undermine our society,” said Sen. Ron Wyden (D-Ore.). “You need to stop paying lip service to shutting down bad actors using these accounts. You’ve got the power.”

This post was syndicated from Ad Exchanger.
