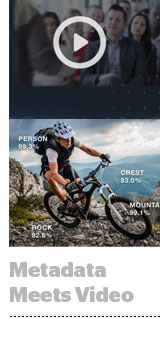

With the explosion of cross-screen TV, publishers and advertisers are clamoring for better discovery, personalization and cataloging of video content, and metadata is answering that call.

Metadata, put simply, adds more context to data. Metadata in video can range from the contents of that video (e.g., colors, products, characters) to the way it’s classified (e.g., words or phrases that enable search engines or discovery mechanisms to surface a video in the first place).

“There is certainly an opportunity for media companies to enhance their targeting capabilities using metadata, which could accelerate the adoption of advanced TV,” said Bre Rossetti, SVP of strategy and innovation at Havas. “Metadata could offer insights that go deeper than content length, program or device type to include contextual data around mood, specific characters, products or moments within a longer video.”

This year, cloud giants such as Amazon, IBM and Microsoft each invested in metadata capabilities within their video stacks, while companies like Nielsen and Grapeshot are tapping metadata to create tools for content personalization and brand safety.

While the use cases for metadata in video and TV advertising aren’t fully baked, some practical applications are starting to emerge.

The Use Case For Content Owners

Publishers and content owners who generate lots of video stand to benefit most from the proliferation of metadata.

“Deep, descriptive information about digital content will drive the next generation of search, discovery and navigation for music, movies and TV shows,” said Sherman Li, VP of video personalization for Nielsen-owned Gracenote.

“Information about the content type, artist, actor, season, episode number, mood, genre and era, among other things, are critical in driving personalization and playlisting for digital entertainment services.”

Some publishers are beginning with the bare minimum – tagging and classifying videos so they’re discoverable.

“Surprisingly, some major players in the space need to sort out the basics: What are my videos about, and where can I find them?” said Andrew Smith, SVP of product strategy at Grapeshot.

Once a publisher establishes a standardized taxonomy for content across video libraries, Smith said the “distribution and monetization of video is simplified by using metadata to make scalable decisions across common categories and themes.”

Publishers also have a real mandate to provide advertisers with more transparency about content that is aligned to their brand needs, said Havas’ Rossetti. But advanced analysis is a work in progress.

“As the industry works to establish how metadata is collected and assigned – image recognition, audio and video – and at what interval, we have to be realistic about what is possible,” she said. “This space is evolving rapidly, but there are still nuances that AI struggles to adopt.”

For instance, algorithms can find videos tagged with the word “Greek,” but may fail to capture the nuance between a video featuring Greek yogurt and a video with Greek pop stars.

“The challenge is that the standards for metadata don’t yet exist,” Rossetti added. “As a result, it’s difficult to scale and automate.”

The Use Case For Advertisers

Brands and advertisers will also benefit once metadata matures and becomes available programmatically.

“At a very tactical level, metadata will allow brands to better implement brand safety filters based on the context of the videos themselves,” said Jenny Schauer, VP and group director of media for Digitas’ Video Center of Excellence.

Platforms such as Openslate, Zefr and Innovid already provide contextual targeting in video. But Schauer said it’s still rare to target based on the context of a video itself, rather than the context of the text accompanying a video on a site page.

“Video metadata has the power to unlock better insights about context, which will ultimately make the advertising experience better for both the brands and the consumers,” Schauer said.

The ability to leverage metadata within programmatic video or over-the-top TV buys is still limited.

“In many cases, advertisers only have the ability to distinguish between genres versus specific programs,” Rossetti said. “As this space evolves, brands will have more visibility and control over inventory. Right now, we just don’t have enough visibility on the front end in most cases.”

Grapeshot’s Smith agreed: “Because the video ecosystem is relatively complex, [integrating] video context metadata into buy-side platforms is still in its infancy.”

Who Are The Emerging Video Metadata Players?

A handful of platforms and tools have emerged to make sense of the growing volume of video metadata.

Although their capabilities vary significantly, most of them use machine learning to manage or act on metadata. Here’s a summary of their capabilities:

Amazon

Amazon launched an image, video-recognition and metadata analysis tool called Rekognition Video in November. The solution runs on Amazon Web Services and taps machine-learning algorithms to analyze video streams in real time. Rekognition Video also integrates with a developer tool called Amazon Kinesis Video Streams, which allows content owners and publishers to index and create applications from cross-screen video data.

IBM

The IBM Cloud Video business seeks to improve personalization and relevancy of video distribution and ad delivery. IBM’s Watson Media also provides metadata analysis to media companies.

Rather than “manually digging through footage to flag violence or objectionable content,” IBM Watson Media uses machine learning to process many different elements of a video at once to improve personalization of content or to safeguard against objectionable content.

IBM Watson Media can also identify important moments in a video stream quickly. For instance, the US Open used it to quickly assess tennis player movement in relation to crowd noise to create a highlight reel of top moments in the match.

Nielsen Gracenote

Nielsen bought Gracenote last December for $560 million. Gracenote, which powers content discovery and recommendations for a number of streaming music providers and consumer electronics makers, uses metadata to improve search results within Apple Music, Spotify and Comcast interfaces.

In addition to wrangling entertainment metadata, which it standardizes using content IDs across its database, Gracenote also offers computer vision or image recognition technology, which can be used in everything from self-driving cars to augmented reality.

“Metadata will also be a key enabler for voice applications,” Gracenote’s Li predicted. “The convergence of video and music databases will enable voice searches like, ‘Who played on Jimmy Kimmel last night?'”

Microsoft

Microsoft, much like IBM, offers media and entertainment companies metadata-based solutions through a business division called Azure Media Services.

Its cloud technology recognizes facial movement and includes audio detection and sentiment analysis. More recently, Microsoft rolled out a video service called Video Indexer that uses AI to reduce the manual labor involved in video stream analysis. A CPG brand might use that data to determine the top 10 product placements over the air or in digital, or to dynamically optimize ad creative to increase its contextual relevance to a program.

Google

Google’s video metadata offering, Google Cloud Video Intelligence, lives within the Google Cloud product family, and promises to make videos “searchable, and discoverable by extracting metadata with an easy-to-use REST API.”

Like Amazon and Microsoft, Google relies on its cloud storage and infrastructure to support high volumes of video metadata analysis. But what’s the practical output of Google’s metadata offering? Ads, of course.

Google’s tool lets media companies and marketers pinpoint locations in videos to insert ads that are contextually relevant to the video content. Alternatively, media owners like CBS Interactive can use it to build a content recommendation engine based on a user’s viewing history and preferences.

This post was syndicated from Ad Exchanger.
